The rise of AI and chatGPT has sparked a global revolution. Robotics has become the talk of the town, with tech companies investing heavily to develop top-notch humanoid robots. For years, learning has remained the ultimate goal in the field of robotics.

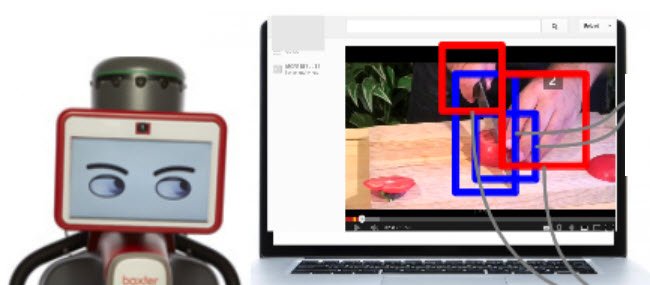

Robotic systems must go beyond following instructions; they need to continuously learn and adapt to survive. The quest for precise robots has led to the exploration of various avenues. Learning from videos has emerged as a fascinating solution, capturing the spotlight in recent years. One such innovation is the Vision-Robotic Bridge (VRB), an evolution of the CMU-developed algorithm called WHIRL, which trains robotic systems by observing humans perform tasks in recorded videos.

Engineers at Carnegie Mellon University (CMU) have developed a model that allows robots to perform household tasks exactly like humans. With the VRB technique, robots can adeptly open drawers and handle knives after watching videos showcasing these actions. This groundbreaking approach requires no human oversight and enables robots to learn new skills in a mere 25 minutes.

By identifying key contact points and understanding the motions involved, robots can replicate actions demonstrated in videos, even in different settings. The VRB technique equips robots with the knowledge of how humans interact with various objects. Consequently, robots can now successfully complete similar tasks in diverse environments.

The CMU team has utilized extensive video datasets, including Epic Kitchen and Ego4D, to enhance accuracy and expand training capabilities. These datasets offer a wealth of egocentric videos depicting daily activities from around the world. This vast collection empowers robots to curiously explore their surroundings and interact with greater precision.

During 200 hours of real-world testing, robots selected for the research successfully learned 12 new tasks. While these tasks were relatively straightforward, such as opening a can or picking up a phone, researchers aim to further develop the VRB system to enable robots to perform more complex, multi-step tasks.

The researchers’ findings are set to be presented in a paper titled “Affordances from human videos as a versatile representation for robotics,” scheduled for presentation at the Conference of Vision and Pattern Recognition in Vancouver, Canada, later this month. This innovative approach holds tremendous potential for enabling robots to leverage the vast resources of the internet and YouTube videos for continuous learning and improvement.